Maintaining code quality is challenging, and maintaining it at scale can become a never-ending task. In older software development lifecycles, a feature would be developed, pass a set of tests, and be integrated into the main codebase.

Product-level tests would take place later, so some defects would be detected unnecessarily late and require patches. Some patching is inevitable, but avoidable patches cost time and money.

In this article, Danni Youziel from Developer Experience explains why we integrated automated quality gates into the early stages of our software development lifecycle, and shows how code quality can be maintained at scale while increasing developer productivity.

A prologue

By 2021, our organisation had over 100 microservices and more than 30 development teams.

We like our developers to move fast without breaking things.

Continuous integration is instrumental to that. In this article we focus on the quality controls in the build pipeline: the point just before a feature is merged into a service.

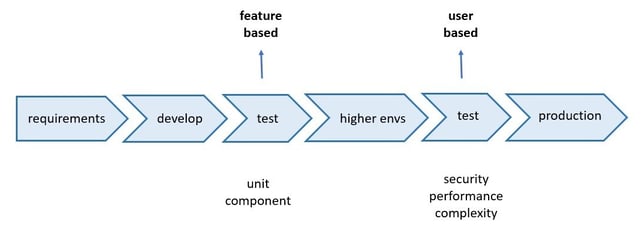

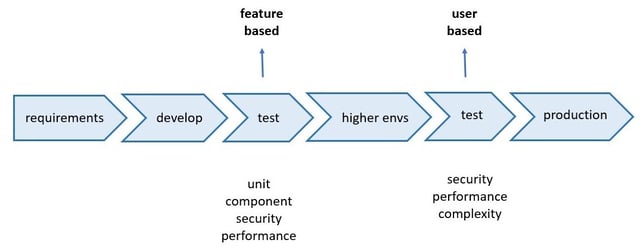

Previously, testing at this stage was functional: unit and integration tests focused on what the feature would do in each scenario. In higher environments, the new feature would also need to integrate with other services and their new features. At this level, a different type of testing is needed, one that evaluates the characteristics of the system as a whole.

These include non-functional characteristics such as security, performance and code complexity. Martin Fowler’s article “Elephant in the Architecture” gives great insight into these.

Consider a feature required for open banking/data. In this example, a customer should be able to get mortgage quotes from multiple brokers. A developer’s priority would be to ensure the customer gets up-to-date and correct information. A security engineer, however, would want tests to make sure the feature satisfies security and compliance standards, and a quality engineer would have other concerns again.

Our preferred approach was to rethink the testing model: to understand which parts of the non-functional testing could be performed earlier, and to shift those tests left in the development cycle.

Fast feedback, slow pipelines

Staying with the mortgage feature, a security requirement in the early stages of the development lifecycle could be checking for potential SQL injection points. Similarly, a quality requirement might be checking for race conditions and their side effects. These checks could be written into each feature's acceptance criteria, but that is neither practical nor fast. The alternative is to automate them with static analysis tools, which analyse source code without compiling or running it, looking for security vulnerabilities or excessive code complexity.
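To give a feel for what "analysing code without running it" means, the sketch below uses Python's standard `ast` module to flag calls to any method named `execute` whose first argument is built dynamically (an f-string with placeholders, `%` formatting, `+` concatenation or `.format()`). This is a deliberately crude heuristic of our own for illustration, not how Fortify, Checkmarx or any other commercial tool works; real tools follow data flow far more precisely and produce fewer false positives.

```python
import ast

def _is_dynamic_string(node: ast.AST) -> bool:
    """Heuristic: True if the expression looks like a dynamically built string."""
    # f"... {x} ..." literals containing interpolated values
    if isinstance(node, ast.JoinedStr):
        return any(isinstance(v, ast.FormattedValue) for v in node.values)
    # "..." % x  or  "..." + x
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Mod, ast.Add)):
        return True
    # "...".format(x)
    if (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)
            and node.func.attr == "format"):
        return True
    return False

def find_sql_injection_risks(source: str) -> list[int]:
    """Return line numbers where execute() receives a dynamically built query."""
    risky_lines = []
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Attribute)
                and node.func.attr == "execute"
                and node.args
                and _is_dynamic_string(node.args[0])):
            risky_lines.append(node.lineno)
    return sorted(risky_lines)

# Hypothetical code under review for the mortgage-quote feature.
sample = '''
def get_quotes(cursor, broker_id):
    cursor.execute(f"SELECT * FROM quotes WHERE broker = {broker_id}")
    cursor.execute("SELECT * FROM quotes WHERE broker = %s", (broker_id,))
'''
print(find_sql_injection_risks(sample))  # [3]
```

Only the f-string query on line 3 is flagged; the parameterised query on line 4 passes its value separately, so the check leaves it alone.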

One of the benefits of Jenkins as a CI tool is its wealth of plugins. Many leading static code analysis (SCA) tools integrate with Jenkins out of the box. Using these plugins, a managed script captures and parses scan results and presents them visually to our developers. In 2018 we had around 40 services and up to 800 merges per day. One of our earlier SCA tools was Fortify.

| Codebase size | Average Fortify SCA scan time | Total lines of code (TLOC) |
| --- | --- | --- |
| Extra large | 12.6 hours | > 1M |
| Large | 2.8 hours | > 400k |
| Medium | 40 minutes | > 100k |

The duration of each scan depended on the size and complexity of the code. Scan times like those in the table meant scans had to run overnight, and any issues were resolved the next day.
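A back-of-the-envelope calculation shows why running these scans on every merge was out of the question. The sketch below assumes, purely for illustration, that each of the 800 daily merges triggered a full scan of a medium-sized service at 40 minutes per scan; in reality merges were spread across services of different sizes, so the true figure would differ.

```python
def daily_scan_hours(merges_per_day: int, minutes_per_scan: float) -> float:
    """Total scanner time per day if every merge triggered a full scan."""
    return merges_per_day * minutes_per_scan / 60

# Hypothetical scenario: 800 merges/day, each a 40-minute medium-service scan.
hours = daily_scan_hours(800, 40)
print(f"{hours:.0f} scanner-hours per day")                   # 533 scanner-hours per day
print(f"{hours / 24:.0f} scanners running around the clock")  # 22 scanners running around the clock
```

Even under this simplified assumption, gating every merge on a full scan would need the equivalent of about 22 scanners working continuously.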

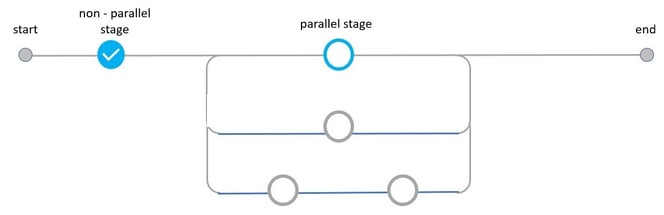

The process picked up defects earlier in the cycle; however, the scans were not really acting as gates. To be gates, they needed to block builds as soon as any issues were found, until the developer had resolved them. The scans also needed to be fast so they didn’t become a bottleneck in the pipeline.
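A gate only acts as a gate if it fails the build. Jenkins, like most CI systems, fails a shell step that exits with a non-zero status, so a minimal gate can be a script that reads the scanner's findings and exits non-zero when any are present. The sketch below assumes the findings have already been exported to a JSON list of objects with `severity` and `title` keys; that report format and the `run_gate` helper are hypothetical, and each tool has its own output format.

```python
import json
import sys

def gate_exit_code(findings: list) -> int:
    """Return 0 if the scan found nothing, 1 if the build should be blocked."""
    return 1 if findings else 0

def run_gate(report_path: str) -> int:
    # Hypothetical report format: [{"severity": "high", "title": "..."}, ...]
    with open(report_path) as f:
        findings = json.load(f)
    for finding in findings:
        # Print each finding so the developer can see why the build was blocked.
        print(f"[{finding['severity'].upper()}] {finding['title']}", file=sys.stderr)
    return gate_exit_code(findings)

if __name__ == "__main__" and len(sys.argv) > 1:
    # A non-zero exit status fails the CI step, and with it the build.
    sys.exit(run_gate(sys.argv[1]))
```

In a pipeline, a step such as `python gate.py findings.json` would then block the merge until the findings are fixed.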

A new approach

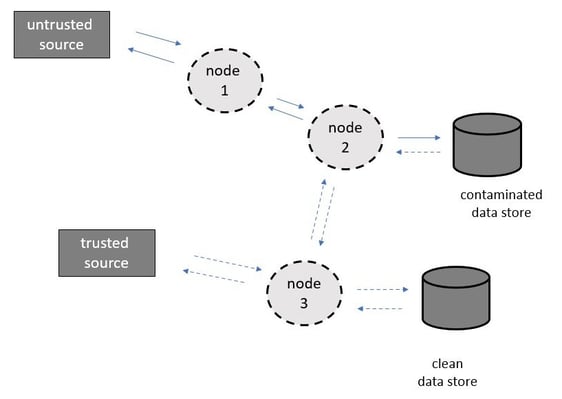

Each SCA tool has its own way of scanning code. For example, when looking for SQL injection, a query can track whether user input (the source) is sanitised before it reaches the data store (the sink). Some tools also support incremental scans, i.e. they scan only the sections of code that have changed.
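The source-and-sink idea can be illustrated with a toy taint analysis. The sketch below models the program as a small, hand-written data-flow graph (a hypothetical representation; real SAST tools derive this from the code itself) and searches for any path from a user-input source to a data-store sink that does not pass through a sanitiser. All node names are made up for the mortgage example.

```python
from collections import deque

def unsanitised_paths(flows: dict[str, list[str]], sources: set[str],
                      sinks: set[str], sanitisers: set[str]) -> list[list[str]]:
    """Find paths from a source to a sink that avoid every sanitiser node."""
    findings = []
    for source in sorted(sources):
        # Breadth-first search, carrying the path taken so far.
        queue = deque([[source]])
        while queue:
            path = queue.popleft()
            node = path[-1]
            if node in sinks:
                findings.append(path)
                continue
            for nxt in flows.get(node, []):
                # Data flowing through a sanitiser is treated as clean, and
                # skipping nodes already on the path stops cycles looping forever.
                if nxt not in sanitisers and nxt not in path:
                    queue.append(path + [nxt])
    return findings

# Hypothetical data flow for the mortgage-quote feature.
flows = {
    "request.broker_id": ["build_query", "validate_id"],
    "validate_id": ["parameterised_query"],
    "build_query": ["db.execute"],
    "parameterised_query": ["db.execute"],
}
print(unsanitised_paths(flows, {"request.broker_id"}, {"db.execute"}, {"validate_id"}))
# [['request.broker_id', 'build_query', 'db.execute']]
```

The route through `validate_id` is considered safe, so only the path that concatenates user input straight into a query is reported.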

We therefore decided to split scans: pull requests would undergo incremental scans before being merged, while full-cycle builds would run full scans overnight.
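The split can be expressed as a simple rule: pull-request builds scan only what changed, while the nightly build scans everything. The sketch below is a hypothetical helper that picks the file set for a scan; the `trigger` values are assumptions, and in practice the changed-file list would come from version control (for example `git diff --name-only`). A real incremental scanner may also need to consider code that the changed files depend on.

```python
def files_to_scan(trigger: str, all_files: list[str],
                  changed_files: list[str]) -> list[str]:
    """Choose an incremental or a full scan depending on what triggered the build."""
    if trigger == "pull_request":
        # Incremental scan: only files touched by the PR that still exist.
        existing = set(all_files)
        return sorted(f for f in changed_files if f in existing)
    if trigger == "nightly":
        # Full scan: every file in the repository.
        return sorted(all_files)
    raise ValueError(f"unknown trigger: {trigger!r}")

repo = ["src/quotes.py", "src/brokers.py", "src/db.py"]
print(files_to_scan("pull_request", repo, ["src/quotes.py"]))  # ['src/quotes.py']
print(len(files_to_scan("nightly", repo, [])))                 # 3
```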

Another advantage of Jenkins is its fail-fast option for parallel branches. Remember, the quality gates introduce extra stages into the build process. We wanted quality gates for code complexity, security vulnerabilities, code coverage and other non-functional requirements, and we also wanted developers to push early and often. As the result of one gate did not necessarily affect another, the gates could run in parallel.
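In a Jenkins declarative pipeline this corresponds to `parallel` stages with `failFast true`. To keep to one language, the sketch below mimics the same behaviour in Python with `concurrent.futures`: it runs independent gate functions concurrently and returns as soon as one fails. The gate functions are hypothetical stand-ins for the complexity, security and coverage gates. One limitation worth noting: Python cannot interrupt a thread that is already running, so only gates that have not started yet are cancelled.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Optional, Tuple

Gate = Callable[[], bool]  # a gate returns True if it passes

def run_gates_fail_fast(gates: Dict[str, Gate],
                        max_workers: int = 4) -> Tuple[bool, Optional[str]]:
    """Run independent gates in parallel; return (passed, first_failed_gate)."""
    pool = ThreadPoolExecutor(max_workers=max_workers)
    futures = {pool.submit(gate): name for name, gate in gates.items()}
    try:
        for future in as_completed(futures):
            try:
                passed = future.result()
            except Exception:
                passed = False  # a crashing gate counts as a failure
            if not passed:
                # Fail fast: cancel gates that have not started yet.
                # Gates already running cannot be interrupted and simply finish.
                for other in futures:
                    other.cancel()
                return False, futures[future]
        return True, None
    finally:
        # Return immediately instead of waiting for still-running gates.
        pool.shutdown(wait=False)

def complexity_gate() -> bool:
    time.sleep(0.2)  # simulate a slower analysis
    return True

def security_gate() -> bool:
    return False     # simulate a blocking finding

def coverage_gate() -> bool:
    time.sleep(0.2)
    return True

result = run_gates_fail_fast({
    "complexity": complexity_gate,
    "security": security_gate,
    "coverage": coverage_gate,
})
print(result)  # (False, 'security')
```

The failing security gate is reported without waiting for the slower gates, which is the property that keeps parallel gates from lengthening the feedback loop.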

The trade-off was the cost in terms of resource management. However, this was justified when weighed against the cost of detecting defects later in the cycle.

Fine-tuning the gates we have today

One of our current Static Application Security Testing (SAST) tools is Checkmarx. It supports incremental scans and private scans, and can be integrated into our Jenkins pipeline. It classifies the problems it finds by severity: low, medium or high. As we have worked with the tool, we have fine-tuned both its configuration and our own ways of working.

Static analysis tools are not perfect and can produce false positives. Giving developers the ability to mark issues as non-exploitable was important to their workflow. In addition, it was important to have an internal security team available to remediate and track any findings of great concern.
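One way to combine severity levels with developer triage is for the gate to ignore findings marked as not exploitable and to block only at or above a chosen severity. The sketch below assumes a simplified `Finding` record and a default threshold of `medium`; both are hypothetical, and Checkmarx and other tools expose their own result states and policies.

```python
from dataclasses import dataclass

SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3}

@dataclass
class Finding:
    title: str
    severity: str                   # "low", "medium" or "high"
    not_exploitable: bool = False   # set by a developer during triage

def blocking_findings(findings: list[Finding], threshold: str = "medium") -> list[Finding]:
    """Return the findings that should fail the build."""
    minimum = SEVERITY_RANK[threshold]
    return [
        f for f in findings
        if not f.not_exploitable and SEVERITY_RANK[f.severity] >= minimum
    ]

findings = [
    Finding("SQL injection in quote lookup", "high"),
    Finding("Verbose error message", "low"),
    Finding("Reflected XSS in broker name", "medium", not_exploitable=True),
]
print([f.title for f in blocking_findings(findings)])
# ['SQL injection in quote lookup']
```

Keeping the not-exploitable flag on the finding, rather than deleting the finding, leaves a record that a security team can later review.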

Development teams also collaborated with security teams to analyse projects and make use of file exclusion lists, so that each microservice's scan covers only the files that actually need scanning. JSON files, for instance, do not need a security scan, but generated code files most likely do. In our development teams, choosing carefully which files to scan has reduced build times by up to 70% while maintaining quality.
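An exclusion list is often just a set of glob patterns. The sketch below uses Python's `fnmatch.fnmatchcase` to drop files matching any pattern before a scan; the patterns and file names are hypothetical examples, and each scanning tool has its own syntax for exclusions. Note that in `fnmatch`, `*` also matches `/`, so `*.json` excludes JSON files in any directory.

```python
from fnmatch import fnmatchcase

# Hypothetical exclusion list agreed between development and security teams.
EXCLUDE_PATTERNS = [
    "*.json",           # configuration and fixtures: no executable code
    "*.md",             # documentation
    "test/fixtures/*",  # static test data
]

def apply_exclusions(files: list[str], patterns: list[str]) -> list[str]:
    """Keep only the files that match none of the exclusion patterns."""
    return [f for f in files if not any(fnmatchcase(f, p) for p in patterns)]

files = [
    "src/quotes.py",
    "src/generated/broker_client.py",  # generated code is still scanned
    "config/app.json",
    "README.md",
    "test/fixtures/sample_quote.txt",
]
print(apply_exclusions(files, EXCLUDE_PATTERNS))
# ['src/quotes.py', 'src/generated/broker_client.py']
```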

Conclusion

Our goal was to detect defects earlier in the development cycle without overwhelming developers. We introduced non-functional tests as a requirement in the build pipeline, while making sure build times did not become excessive. In developer experience, automation is the aim, but without human collaboration it does not always hit the mark. Our developers learned a lot about quality at scale from the tools' feedback, and we learned a lot about quality at scale from our developers' feedback.